Executive Summary: AI-Ready Infrastructure for Life Sciences

Life sciences organizations face unprecedented data challenges as AI reshapes research, diagnostics, and drug discovery. Traditional infrastructure can’t meet the speed, scale, or compliance demands of modern workflows. DDN’s Data Intelligence Platform eliminates latency bottlenecks, unifies data silos, and supports regulatory compliance out of the box—accelerating time to discovery and unlocking new frontiers in biomedical innovation.

Why Traditional Storage Fails for AI-Driven Research

As life sciences organizations push toward AI-driven innovation, they face increasing challenges tied to infrastructure limitations. Genomic sequencing, real-time imaging, and complex clinical trials generate enormous volumes of structured and unstructured data. To derive insight from this data, researchers must run high-performance workloads at scale, often under strict compliance mandates and time-to-insight pressure.

Traditional storage systems, originally built for more linear, file-centric workflows, are insufficient for today’s needs. AI training, multimodal data fusion, and cross-disciplinary collaboration demand ultra-low latency, scalable throughput, and seamless data movement between environments. Without the right infrastructure, organizations experience delays in discovery, inconsistent model performance, and growing technical debt.

Accelerating Genomics and Imaging with High-Performance Data Infrastructure

Genomics and imaging workloads present two fundamental infrastructure problems: performance and parallelism. Instruments like next-generation sequencers and cryo-electron microscopes can generate terabytes of data per session. For these assets to yield scientific value, that data must be processed and analyzed with minimal delay.

Storage latency becomes a critical bottleneck in these environments. Whether running alignment algorithms, checkpointing model iterations, or staging datasets for inference, any delay in I/O slows time-to-insight. DDN addresses this with EXAScaler®, a Lustre-based parallel file system tuned specifically for HPC and AI workloads, which reduces latency by up to 40% in key operations such as metadata lookups and I/O-intensive reads and writes.

This is especially impactful in genomics workflows, where pipelines such as GATK, DeepVariant, and BWA require fast, parallel access to datasets that can span petabytes. In imaging-heavy environments such as electron microscopy and histopathology, where data fidelity is critical, DDN supports real-time ingestion and analytics without compromising throughput.
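Parallel file systems such as Lustre stripe a file across multiple storage targets so that many threads or clients can read different regions of it at the same time. The sketch below illustrates only the client-side half of that idea: splitting a file into fixed-size byte ranges and reading them concurrently with a thread pool. It is a generic, hypothetical illustration built on Python's standard library, not a DDN or EXAScaler API; the chunk size and worker count are arbitrary assumptions, and `os.pread` is available only on Unix-like systems.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def read_range(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes starting at `offset` using positional reads."""
    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        remaining, pos = length, offset
        # pread may return fewer bytes than requested, so loop until done or EOF.
        while remaining > 0:
            data = os.pread(fd, remaining, pos)
            if not data:
                break
            chunks.append(data)
            pos += len(data)
            remaining -= len(data)
        return b"".join(chunks)
    finally:
        os.close(fd)


def parallel_read(path: str, chunk_size: int = 4 * 1024 * 1024, workers: int = 8) -> bytes:
    """Read a whole file by issuing fixed-size range reads concurrently.

    chunk_size and workers are illustrative defaults, not tuned values.
    """
    size = os.path.getsize(path)
    offsets = range(0, size, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the chunks reassemble correctly.
        parts = pool.map(lambda off: read_range(path, off, min(chunk_size, size - off)), offsets)
        return b"".join(parts)
```

On a striped parallel file system, concurrent range reads like these can be served by different storage targets at once; on a single local disk the speedup, if any, will be much smaller.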

Result: Reduced lag between data acquisition and analysis, enabling faster iteration and accelerating early-stage research and clinical trials.

AI-Optimized Workflows for Drug Discovery and Diagnostics

AI is reshaping the way life sciences organizations approach drug discovery and diagnostics. From large language models trained on molecular datasets to generative AI used for de novo compound design, modern research requires infrastructure that can support compute-intensive, data-rich workflows.

A key limitation in many environments is inefficient data staging. AI training jobs are often I/O-bound: storage systems cannot feed data to GPUs quickly enough, so GPUs sit idle, infrastructure is underutilized, and training times stretch out.

DDN’s platform addresses this by automating the movement of data between ingestion, training, and inference stages. It delivers high-throughput object and file-based access with support for modern AI pipelines, including PyTorch DataLoader, NVIDIA NeMo, and Ray. Integrated data workflow automation minimizes manual data engineering overhead, enabling AI/ML teams to focus on model performance, not infrastructure constraints.
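On the client side, the data-staging problem is commonly mitigated by prefetching. A background producer reads upcoming batches while the consumer (standing in for a GPU training step) works on the current one, so I/O and compute overlap. PyTorch's DataLoader exposes this through its `num_workers` and `prefetch_factor` parameters. The dependency-free sketch below shows the same idea with a bounded queue; `load_batch` and its fixed delay are hypothetical placeholders for storage reads, not part of any DDN interface.

```python
import queue
import threading
import time
from typing import Callable, Iterator, List

_SENTINEL = object()  # marks the end of the stream


def prefetch(batch_ids: List[int], load_batch: Callable[[int], bytes], depth: int = 4) -> Iterator[bytes]:
    """Yield loaded batches in order while a background thread reads ahead.

    `depth` bounds how many batches are buffered, which caps memory use.
    """
    buf: "queue.Queue[object]" = queue.Queue(maxsize=depth)

    def producer() -> None:
        try:
            for bid in batch_ids:
                buf.put(load_batch(bid))  # blocks while the buffer is full
        except Exception as exc:  # hand errors to the consumer instead of hanging
            buf.put(exc)
        finally:
            buf.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _SENTINEL:
            return
        if isinstance(item, Exception):
            raise item
        yield item


def load_batch(batch_id: int) -> bytes:
    """Hypothetical stand-in for a storage read with fixed latency."""
    time.sleep(0.01)
    return bytes([batch_id % 256]) * 1024


# Usage (train_step is hypothetical):
# for batch in prefetch(list(range(100)), load_batch):
#     train_step(batch)
```

The queue depth trades memory for tolerance of storage jitter. If storage throughput is consistently below what the consumer needs, prefetching only hides latency and cannot close the gap.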

In addition, DDN Infinia ensures consistent, high-speed access to the diverse datasets required for AI development in diagnostics and therapeutic research. Because this makes it practical to train and validate on larger, more representative data, it can help improve performance metrics such as precision, recall, and AUC.

Result: Up to 30% improvement in GPU utilization and significantly reduced training time for AI models targeting biomarker identification, protein folding, and personalized treatment planning.

Managing Petabyte-Scale Genomics and Imaging Data Across the Enterprise

Modern research organizations operate in environments where data is generated and consumed across multiple domains—genomics, imaging, bioinformatics, clinical operations. These data streams often reside in silos, stored using disparate protocols, and indexed inconsistently. As a result, scientists are forced to spend time extracting, transforming, and preparing data rather than analyzing it.

DDN enables unified data environments through a scalable data lake architecture with credential-based access, which ingests structured, semi-structured, and unstructured data into a single, accessible namespace. This includes compatibility with legacy datasets as well as real-time ingest from sequencing devices, electronic medical records (EMRs), imaging platforms, and lab instruments.

The system supports native anonymization and policy-driven access controls, allowing secure sharing across departments while complying with data privacy regulations. Metadata indexing and tagging allow for rapid query and retrieval, making cross-modal data analysis practical and efficient.
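Metadata indexing and tagging can be illustrated with a simple inverted index. The index maps each key=value tag to the set of object identifiers that carry it, so a multi-tag query becomes a set intersection instead of a scan over every file. The sketch below is a hypothetical, in-memory illustration of that general technique. It does not represent DDN's indexing implementation, and the object keys and tag names are invented for the example.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


class TagIndex:
    """In-memory inverted index: (key, value) tag -> set of object IDs."""

    def __init__(self) -> None:
        self._postings: Dict[Tuple[str, str], Set[str]] = defaultdict(set)

    def add(self, object_id: str, tags: Dict[str, str]) -> None:
        for key, value in tags.items():
            self._postings[(key, value)].add(object_id)

    def query(self, **required: str) -> Set[str]:
        """Return IDs of objects that match every requested tag (AND query)."""
        if not required:
            return set()
        # Intersect starting from the smallest posting list to keep work low.
        postings = sorted((self._postings.get(item, set()) for item in required.items()), key=len)
        result = set(postings[0])  # copy so the index itself is not mutated
        for ids in postings[1:]:
            result &= ids
        return result


# Hypothetical objects spanning two modalities and two studies.
idx = TagIndex()
idx.add("run42/sample1.cram", {"modality": "genomics", "study": "ONC-01"})
idx.add("run42/slide7.svs", {"modality": "histopathology", "study": "ONC-01"})
idx.add("run43/sample2.cram", {"modality": "genomics", "study": "CARD-02"})
print(idx.query(modality="genomics", study="ONC-01"))  # {'run42/sample1.cram'}
```

A cross-modal query such as `idx.query(study="ONC-01")` returns both the sequencing file and the slide image for that study. This is the kind of retrieval that consistent tagging makes cheap.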

One of the top five global pharmaceutical companies adopted DDN to accelerate protein structure analysis pipelines. With DDN's low-latency data platform, the company reduced model training time for molecular classification by over 25%, enabling faster iterations in its drug development pipeline.

Result: A unified platform for researchers to access comprehensive, cross-functional datasets, improving both collaboration and the statistical power of analysis.

Built-In Compliance for Biomedical and Clinical Workloads

In life sciences, compliance is a constant operational requirement. Organizations must protect clinical and research data under regulatory mandates such as HIPAA, GDPR, and 21 CFR Part 11. This includes maintaining immutable audit trails, access logs, encryption, and verifiable data lineage.
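One common way to make an audit trail tamper-evident, supporting the immutable audit trails and verifiable lineage described above, is to chain entries by hash. Each record stores the SHA-256 digest of the previous record, so altering any earlier entry breaks every later link. The sketch below is a generic illustration of that technique using Python's standard library, not DDN's logging mechanism. On its own it detects tampering rather than preventing it; true immutability also requires write-once storage such as object lock, and a production trail would also record a trusted timestamp, which is omitted here.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: Dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: List[Dict], user: str, action: str, resource: str) -> None:
    """Append an audit record linked to the hash of the previous record."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"seq": len(log), "user": user, "action": action, "resource": resource, "prev": prev}
    entry["hash"] = _digest(entry)  # digest is computed before the "hash" key exists
    log.append(entry)


def verify(log: List[Dict]) -> bool:
    """Return True only if every record's hash and back-link are intact."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Verification walks the chain from the first record, so an auditor holding only the latest hash can detect whether any earlier record was edited or removed.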

Many storage environments lack native compliance controls and rely on external toolchains to enforce governance, increasing complexity and risk.

DDN’s Security and Compliance Layer provides built-in capabilities that meet regulatory expectations without compromising system performance. These include real-time encryption, fine-grained role-based access control (RBAC), immutable object lock, multi-tenant access control, and automated logging and reporting.
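Fine-grained RBAC combined with automated logging follows a simple pattern. Roles map to permitted (action, resource-prefix) pairs, access is denied by default, and every decision, allowed or denied, is recorded. The sketch below is a hypothetical Python illustration of that pattern. The role names, permissions, and path prefixes are invented, and it is not DDN's policy engine or configuration format.

```python
import logging
from typing import Dict, List, Set, Tuple

logging.basicConfig(level=logging.INFO, format="%(asctime)s AUDIT %(message)s")
audit = logging.getLogger("audit")

# Hypothetical policy: role -> set of (action, resource prefix) grants.
POLICY: Dict[str, Set[Tuple[str, str]]] = {
    "clinician": {("read", "clinical/")},
    "data_steward": {("read", "clinical/"), ("write", "clinical/"), ("read", "genomics/")},
    "researcher": {("read", "deidentified/")},
}


def is_allowed(roles: List[str], action: str, resource: str) -> bool:
    """Deny by default; allow only if some role grants the action on a matching prefix."""
    return any(
        action == granted_action and resource.startswith(prefix)
        for role in roles
        for granted_action, prefix in POLICY.get(role, set())
    )


def authorize(user: str, roles: List[str], action: str, resource: str) -> bool:
    """Evaluate the policy and log the outcome so denials are auditable too."""
    decision = is_allowed(roles, action, resource)
    audit.info("user=%s roles=%s action=%s resource=%s decision=%s",
               user, ",".join(roles), action, resource, "ALLOW" if decision else "DENY")
    return decision
```

In this sketch a researcher can read de-identified cohorts but not identifiable clinical records. Because the log line is written inside the authorization path, no access decision can bypass the audit record.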

The system also enables zero-trust architectures through identity federation and supports secure multi-cloud environments for research collaboration without compromising auditability.

Result: Reduced risk exposure, improved data governance, and the ability to meet audit and reporting requirements with minimal manual intervention.

Future-Proof, Scalable Infrastructure for Life Sciences

As research priorities evolve and datasets grow, life sciences organizations must ensure that infrastructure investments today do not become limitations tomorrow. Support for hybrid deployments, AI-native workflows, and protocol-agnostic storage are now essential features.

| Feature | DDN Infinia + EXAScaler | AWS FSx | NetApp ONTAP |
| --- | --- | --- | --- |
| Latency | Sub-millisecond latency; 22x faster object listing vs. AWS S3 | Higher latency for metadata-heavy and small-file I/O | Moderate latency; better suited to traditional workloads |
| GPU Utilization | Up to 30% improvement in GPU efficiency | GPUs can be left waiting on data (I/O-bound) with slower storage | Limited GPU optimization |
| Compliance Support | Native HIPAA, GDPR, and 21 CFR Part 11 support | Requires third-party tools for full compliance | Basic built-in controls; external tooling recommended |
| Workflow Integration | Optimized for NVIDIA NeMo and NIM, Ray, RAG pipelines, and PyTorch DataLoader | Limited native integration with AI frameworks | Compatible with Kubernetes and ML tooling |
| Scalability & Flexibility | Hybrid, multi-cloud, and on-prem; supports S3, POSIX, SQL | Cloud-native only; limited on-prem integration | Strong hybrid support but less protocol flexibility |
| Search and Metadata | 600x faster metadata search/listing vs. AWS | Basic metadata handling | Moderate metadata handling |
| Architecture | Modular, software-defined, multi-tenant, high-performance | Proprietary infrastructure; less flexible | Hardware-dependent features limit adaptability |
| TCO & Efficiency | 10x power/cooling efficiency, lower idle GPU time, reduced infrastructure costs | Pay-as-you-go, but may lead to overuse and hidden costs | Higher licensing and hardware costs at scale |

DDN’s architecture is modular, scale-out, and supports S3, NFS, CSI, POSIX, and SQL interfaces. It enables seamless extension to public cloud environments (e.g., AWS, Azure, GCP) or sovereign clouds as required for data residency and collaboration.

Workflows can scale from lab-level experiments to enterprise-wide AI platforms without re-engineering pipelines or migrating data. Existing applications can access storage using native protocols, reducing the need for custom integration.

Result: Sustained research agility with infrastructure that scales seamlessly with compute, data volume, and analytical complexity.

Unifying AI Workflows to Break Down Research Silos

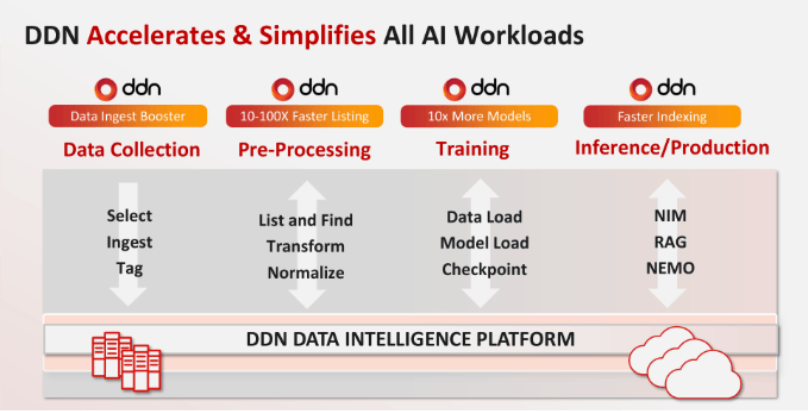

A significant inhibitor to operationalizing AI in life sciences is the fragmented nature of data pipelines. Training data often resides in one location, while inference workloads and analytics run in another. Workflow orchestration becomes difficult, and integrating new AI tooling adds overhead.

DDN provides an abstraction layer that unifies data ingestion, labeling, training, inference, and visualization workflows across the enterprise. It supports common AI and ML tools, integrates with retrieval-augmented generation (RAG) pipelines and vector search, and centralizes metadata management.
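RAG depends on vector search. Documents are stored as embedding vectors, and a query embedding is compared against them to retrieve the most similar items for the language model's context. The brute-force cosine-similarity sketch below shows that core operation in plain Python. The tiny three-dimensional vectors are invented for illustration; real embeddings come from a model and have hundreds of dimensions, and production systems typically use approximate nearest-neighbor indexes rather than a linear scan.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], corpus: Dict[str, Sequence[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k documents most similar to the query, best first (exact linear scan)."""
    scored = ((doc_id, cosine(query, vec)) for doc_id, vec in corpus.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Toy embeddings, invented for illustration only.
corpus = {
    "kinase-inhibitor-assay": [0.9, 0.1, 0.0],
    "egfr-mutation-report": [0.8, 0.3, 0.1],
    "mri-protocol-notes": [0.0, 0.2, 0.9],
}
# The kinase assay ranks first, then the EGFR report; the MRI notes are not returned.
print(top_k([1.0, 0.2, 0.0], corpus, k=2))
```

The retrieved document IDs would then be used to fetch the underlying text for the model's prompt. Keeping those documents and their embeddings on the same platform avoids an extra copy step between the retrieval and generation stages.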

This unification allows organizations to adopt new AI models and tools without restructuring their data estate. Researchers can develop and test AI models on the same platform where they are deployed, ensuring consistency and reducing time from development to application.

Result: Integrated, end-to-end research pipelines that support rapid iteration, easier onboarding of new tools, and improved model reproducibility.

Conclusion: Operationalizing AI for Faster Discovery in Life Sciences

Life sciences organizations that aim to lead in discovery, diagnostics, and treatment innovation cannot afford to be limited by outdated infrastructure. Achieving results at scale requires more than performance: it requires architectural alignment with the data lifecycle, AI readiness, and regulatory compliance.

DDN delivers on this need with a unified approach to data management, storage, and AI enablement. By reducing latency, scaling throughput, unifying workflows, and securing compliance from day one, DDN helps life sciences organizations operationalize AI at scale and accelerate their time-to-discovery.

This architecture aligns storage, AI, and analytics for research environments with the highest demands for performance, compliance, and future scalability.

To learn more about how DDN can support your research workflows and AI initiatives, contact our solutions team for a tailored briefing.

Frequently Asked Questions

AI-optimized infrastructure refers to high-performance data platforms that handle genomics, imaging, and bioinformatics workloads at scale. DDN’s Data Intelligence Platform ensures low latency, regulatory compliance, and seamless AI integration.

By reducing storage latency by up to 40% and enabling parallel, high-throughput access to datasets, DDN accelerates genomics pipelines (GATK, BWA, DeepVariant) and real-time imaging analytics.

Life sciences organizations must comply with HIPAA, GDPR, and 21 CFR Part 11. DDN provides built-in encryption, RBAC, immutable audit trails, and automated reporting to simplify compliance.

DDN increases GPU utilization by up to 30% by ensuring high-speed data delivery to training and inference pipelines, reducing training time for protein folding, biomarker discovery, and diagnostics.

DDN supports hybrid, multi-cloud, and sovereign deployments with protocol-agnostic interfaces, enabling scalability from lab experiments to enterprise AI platforms.