In the era of data intelligence, enterprises need AI infrastructure solutions that unify disparate data, deliver exceptional performance, and scale cost-effectively across any environment. The partnership between Oracle Cloud Infrastructure (OCI) and DDN Infinia delivers a cloud-native AI storage solution that empowers organizations to drive end-to-end AI—from data preparation to model training, analytics, and real-time inference like retrieval-augmented generation (RAG). A recent Proof of Concept (POC) showcases how DDN Infinia, running on OCI’s high-performance compute, simplifies storage for AI, accelerates innovation, and reduces costs, making it a trusted choice for industry leaders.

Simplifying AI Workloads with a Unified Data Platform

Modern AI workloads, spanning data preparation, analytics, model loading, and inference, require AI storage that handles structured, semi-structured, and unstructured data with high concurrency and low latency. Traditional storage solutions often create silos, leaving GPUs underutilized and increasing complexity. DDN Infinia, a software-defined, S3-compatible key-value (KV) store, addresses these challenges by offering:

- Unified Data Access: Connects multi-modal data across environments, breaking down silos across a global infrastructure.

- Extreme Performance: Delivers ultra-low latency, high throughput, and millions of IOPS (objects per second), keeping GPUs fully utilized.

- Massive Scalability: Supports exabyte-scale datasets and 200,000+ GPUs, ideal for large-scale AI infrastructure.

- Native Multi-Tenancy: Provides workload isolation with quality of service (QoS) controls and dynamic scaling, enabling secure, efficient resource sharing.

By integrating with OCI’s high-performance compute and 100 GbE networking, Infinia empowers organizations to accelerate AI-driven outcomes, such as real-time fraud detection in finance or faster content delivery in media.

POC Architecture: Built for Scalability and Reliability

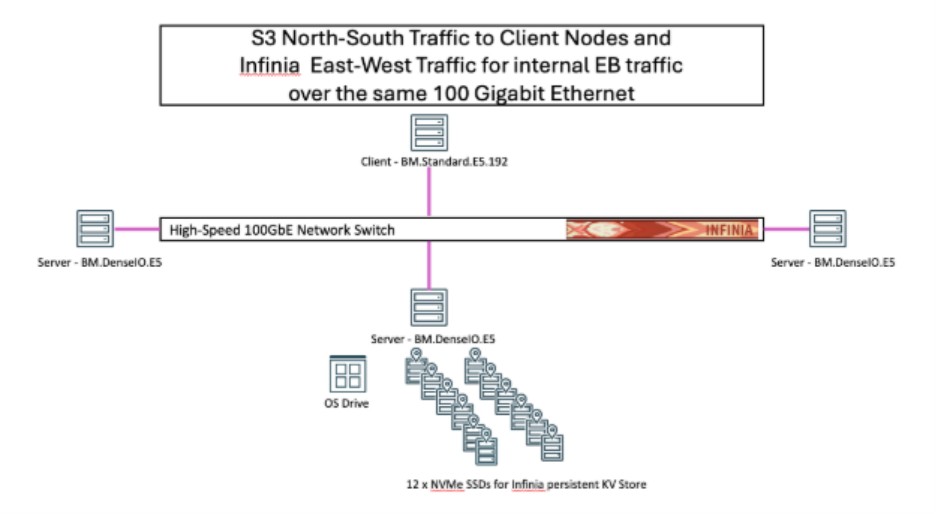

The Oracle-DDN POC demonstrated Infinia’s ability to deliver scalable, high-performance S3 workloads for AI/ML, media, and HPC applications on OCI bare metal servers. The architecture included:

- Server Nodes: Six OCI BM.DenseIO.E5 instances, each with 2× AMD EPYC 9J14 CPUs (128 OCPUs), 1.5 TB RAM, 12× 6.8 TB NVMe SSDs, and a 100 GbE interface, forming a logical storage cluster with ~450 TB of usable capacity. Erasure coding and metadata indexing are designed to provide 99.999% availability and uninterrupted access.

- Client Nodes: Six OCI BM.Standard.E5.192 instances, each with 2× 96-core AMD EPYC CPUs (192 OCPUs), 2.2 TB RAM, and a 100 GbE interface, simulating AI applications over the S3 protocol.
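As a rough check on the cluster size, the raw NVMe capacity follows directly from the node and drive counts above. The sketch below computes only raw capacity. The POC does not state the erasure-coding layout or whether the ~450 TB usable figure is quoted in decimal TB or binary TiB, so the usable figure cannot be derived here. The constant and function names are illustrative.

```python
TB = 10**12   # decimal terabyte, as drive vendors usually quote capacity
TIB = 2**40   # binary tebibyte, as many operating-system tools report capacity


def raw_capacity_bytes(nodes: int, drives_per_node: int, drive_tb: float) -> float:
    """Total raw NVMe capacity before erasure coding, metadata, and reserved space."""
    if nodes < 1 or drives_per_node < 1 or drive_tb <= 0:
        raise ValueError("node count, drive count, and drive size must be positive")
    return nodes * drives_per_node * drive_tb * TB


if __name__ == "__main__":
    # Node and drive counts are taken from the server-node description above.
    raw = raw_capacity_bytes(nodes=6, drives_per_node=12, drive_tb=6.8)
    print(f"raw: {raw / TB:.1f} TB = {raw / TIB:.1f} TiB")
```

Six nodes × 12 drives × 6.8 TB gives 489.6 TB (about 445 TiB) of raw flash; the gap between raw and usable capacity depends on the protection scheme and reserved space.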

This setup, deployable in just 10 minutes, supports high availability and automated maintenance, making it ideal for AI infrastructure solutions. For example, enterprises can rely on this architecture for predictive analytics in retail, ensuring consistent performance under high-concurrency workloads.

Benchmark Results: Unparalleled Performance for AI that Scales

The POC evaluated DDN Infinia’s S3 performance on OCI, measuring objects per second (Obj/sec), throughput, latency, and Time-to-First-Byte (TTFB) with tools such as Warp, the AWS CLI, and s5cmd to emulate real-world AI workloads.
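TTFB differs from total transfer time: the clock stops when the first bytes of the response arrive, before the rest of the object is streamed. The sketch below times both for a single request. `open_stream` is a hypothetical callable that issues the request and returns a file-like body; with boto3, for example, it could wrap `s3.get_object(Bucket=..., Key=...)["Body"]`, whose streaming body supports `read(amt)`. This is an illustration of the metric, not the methodology used by Warp or the other POC tools.

```python
import io
import time
from typing import BinaryIO, Callable, Tuple


def measure_ttfb(open_stream: Callable[[], BinaryIO],
                 chunk_size: int = 64 * 1024) -> Tuple[float, float, int]:
    """Return (ttfb_seconds, total_seconds, bytes_read) for one request.

    `open_stream` is a hypothetical callable that issues the request and
    returns a readable body (for example, a wrapper around an S3 GET).
    """
    start = time.perf_counter()
    body = open_stream()                  # issue the request
    first = body.read(chunk_size)         # wait for the first bytes of the body
    ttfb = time.perf_counter() - start
    total_bytes = len(first)
    while True:
        chunk = body.read(chunk_size)     # drain the rest of the object
        if not chunk:
            break
        total_bytes += len(chunk)
    total = time.perf_counter() - start
    return ttfb, total, total_bytes


if __name__ == "__main__":
    # Local stand-in for a real object body so the sketch runs without a server.
    ttfb, total, n = measure_ttfb(lambda: io.BytesIO(b"x" * 1_000_000))
    print(f"TTFB {ttfb * 1000:.3f} ms, total {total * 1000:.3f} ms, {n} bytes")
```

For large objects the two numbers diverge sharply, which is why TTFB is the more relevant metric for latency-sensitive paths such as streaming or token generation.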

Single-Client Throughput:

- Warp: Achieved 10.6 GiB/s, approaching the 100 GbE NIC’s theoretical limit of 11.641 GiB/s and showing that Infinia can use nearly the full network bandwidth.

- s5cmd cp: Delivered 4.7 GiB/s, leveraging Go’s concurrency for efficient transfers.

- AWS CLI cp: Recorded 2.2 GiB/s, solid performance despite the lower parallelism of the Python-based CLI.
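The 11.641 GiB/s ceiling follows from unit conversion: 100 Gbit/s is 12.5 × 10^9 bytes/s, and dividing by 2^30 bytes per GiB gives about 11.64 GiB/s. The sketch below reproduces that figure and expresses each tool's single-client result as a fraction of the raw line rate. It ignores Ethernet, TCP, and HTTP overhead, so real achievable throughput is somewhat lower than this ceiling; the function names are illustrative.

```python
GIB = 2**30  # bytes in one gibibyte


def line_rate_gib_per_s(link_gbps: float) -> float:
    """Convert a link speed in decimal Gbit/s to GiB/s, ignoring protocol overhead."""
    bytes_per_s = link_gbps * 1e9 / 8
    return bytes_per_s / GIB


def utilization(measured_gib_per_s: float, link_gbps: float) -> float:
    """Fraction of the raw line rate reached by a measured throughput."""
    return measured_gib_per_s / line_rate_gib_per_s(link_gbps)


if __name__ == "__main__":
    print(f"100 GbE limit: {line_rate_gib_per_s(100):.2f} GiB/s")
    # Single-client results reported in the POC.
    for tool, gib_s in [("warp", 10.6), ("s5cmd cp", 4.7), ("AWS CLI cp", 2.2)]:
        print(f"{tool:>10}: {utilization(gib_s, 100):.0%} of line rate")
```

By this measure warp reaches roughly 91% of line rate, s5cmd about 40%, and the AWS CLI about 19%.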

Key Performance Highlights

| S3 Operation | Obj/sec | Throughput | Latency |
|---|---|---|---|
| PUT | 52K | 27.6 GiB/s | 4 ms |
| GET | 225K | 34.6 GiB/s | 1.7 ms |

| S3 Metadata Operation | Obj/sec |
|---|---|
| LIST | 194K |
| STAT | 345K |

| Metric | Value |
|---|---|
| Time-to-First-Byte | 5 ms |

Table 1: Aggregate DDN Infinia S3 performance on OCI across the six BM.DenseIO.E5 server nodes
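Latency and Obj/sec together indicate how many requests were in flight. By Little's law (L = λW), the average number of requests in a system equals the arrival rate times the average time each request spends in it. The sketch below applies this to the PUT and GET rows of Table 1, assuming the latency figures are averages measured under the same steady-state load as the Obj/sec figures, which the table does not state.

```python
def in_flight_requests(ops_per_s: float, latency_ms: float) -> float:
    """Little's law: average requests in flight L = throughput (lambda) x latency (W)."""
    if ops_per_s < 0 or latency_ms < 0:
        raise ValueError("throughput and latency must be non-negative")
    return ops_per_s * (latency_ms / 1000.0)


if __name__ == "__main__":
    # Obj/sec and latency pairs from Table 1; assumes both were measured
    # at the same steady-state load, which the table does not state.
    for op, ops, lat_ms in [("PUT", 52_000, 4.0), ("GET", 225_000, 1.7)]:
        print(f"{op}: ~{in_flight_requests(ops, lat_ms):.0f} requests in flight")
```

Under that assumption the GET row implies roughly 380 requests in flight across the cluster and the PUT row roughly 200, which is the kind of concurrency the client fleet must sustain to reach these rates.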

| S3 Tool/Benchmark | Single S3 Client to Single S3 Server Throughput |
|---|---|
| warp benchmark | 10.6 GiB/s |
| AWS CLI cp | 2.2 GiB/s |
| s5cmd cp | 4.7 GiB/s |

Table 2: Single S3 Client to Single S3 Server DDN Infinia throughput on OCI

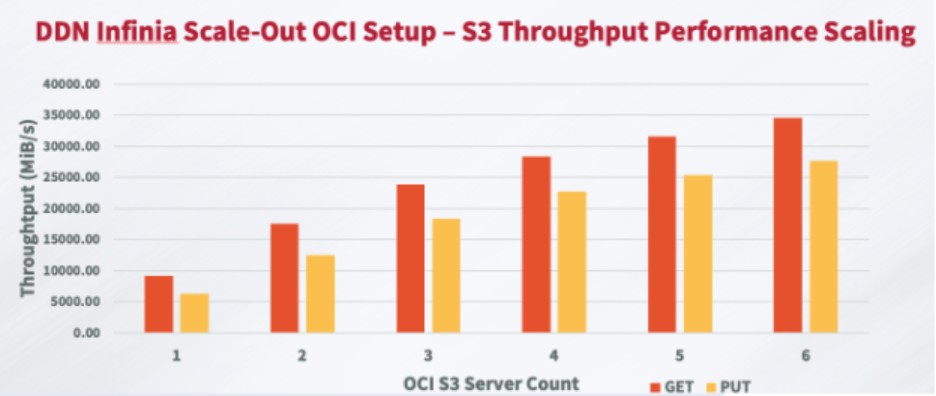

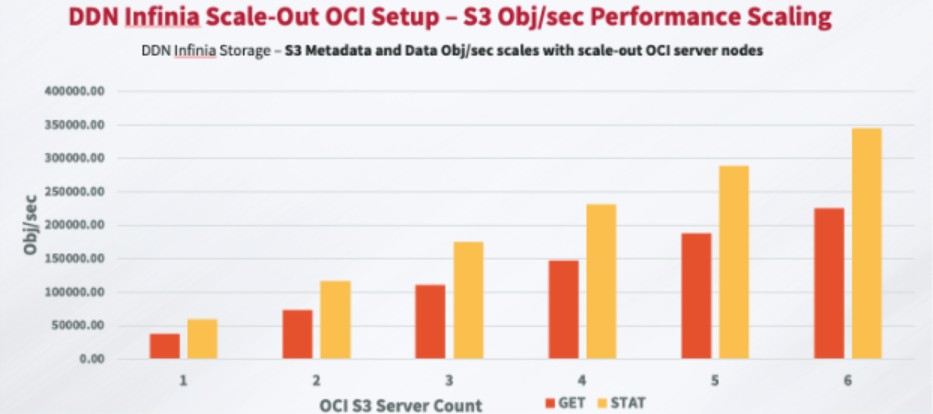

On OCI, DDN Infinia consistently delivered sustained high Obj/sec (IOPS), sustained high throughput, and latency and TTFB in the low single-digit milliseconds. Storage performance also scaled with the number of OCI server nodes.

- Scalability: Throughput scaled linearly with additional OCI server nodes, with each node sustaining ~4.6 GiB/s (PUT) and ~5.7 GiB/s (GET). Metadata performance scaled to millions of Obj/sec, supporting thousands of tags per object.

- Low Latency and TTFB: Achieved single-digit millisecond latency and TTFB, 25x better than AWS S3, enabling faster AI token generation and content access for edge/CDN caching.
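The per-node figures can be checked against Table 1: six nodes at ~4.6 GiB/s PUT gives 27.6 GiB/s, matching the measured aggregate. The sketch below computes the ideal linear projection and a scaling-efficiency ratio (measured divided by ideal). The function names are illustrative, and any projection beyond the six nodes tested in the POC relies on the linear-scaling assumption rather than measured data.

```python
def projected_aggregate(per_node_gib_s: float, nodes: int) -> float:
    """Aggregate throughput assuming perfectly linear scale-out (an assumption)."""
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    return per_node_gib_s * nodes


def scaling_efficiency(measured_gib_s: float, per_node_gib_s: float, nodes: int) -> float:
    """Measured aggregate divided by the ideal linear projection (1.0 = perfectly linear)."""
    return measured_gib_s / projected_aggregate(per_node_gib_s, nodes)


if __name__ == "__main__":
    per_node = {"PUT": 4.6, "GET": 5.7}     # ~GiB/s per server node, from the text
    measured = {"PUT": 27.6, "GET": 34.6}   # six-node aggregate, from Table 1
    for op in per_node:
        ideal = projected_aggregate(per_node[op], 6)
        eff = scaling_efficiency(measured[op], per_node[op], 6)
        print(f"{op}: ideal {ideal:.1f} GiB/s, measured {measured[op]} GiB/s, "
              f"efficiency {eff:.2f}")
```

An efficiency slightly above 1.0 for GET (34.6 vs. 6 × 5.7 = 34.2 GiB/s) simply reflects rounding in the ~5.7 GiB/s per-node figure.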

DDN Infinia’s S3 metadata and data Obj/sec performance scales with the number of OCI server nodes (Figure 4), and the aggregate load is balanced evenly across the participating nodes.

These metrics, combined with Infinia’s integration with AI and analytics frameworks such as NeMo, TensorFlow, and Spark, help speed up model training and inference and reduce time-to-insight. For instance, healthcare organizations can accelerate medical imaging analysis and improve diagnostic speed.

With native multi-tenancy, namespace isolation, and millions of tags, Infinia on OCI allows teams to manage AI datasets intelligently — supporting shared infrastructure without added complexity.

Running on OCI’s dense bare metal compute and 100 GbE network fabric, Infinia takes full advantage of Oracle’s infrastructure, delivering consistent, reliable performance at scale. The joint Oracle-DDN POC confirmed that DDN Infinia on OCI delivers linear scalability, high S3 throughput, ultra-low latency, and massive concurrency.

Business Value and Use Cases

The OCI-DDN Infinia solution delivers measurable outcomes for AI infrastructure:

- Faster Innovation and More Responsive Systems: Eliminates I/O bottlenecks, reducing training and inference times for applications like fraud detection (financial services) or personalized recommendations (retail). DDN Infinia’s lower latency and TTFB enable faster access to S3 objects, which speeds up AI token generation and gives streaming providers faster access to content on edge and CDN caching devices, helping them absorb bursts of user requests.

- Cost Efficiency: Ensures that GPUs and CPUs on OCI remain fully utilized with sustained high-performance object access coupled with the elimination of I/O bottlenecks, translating to lower cost per inference. The OCI BM.DenseIO.E5 shape delivers the most cost-effective* NVMe storage, offering the lowest cost per TB and sustaining cost-efficiency as capacity requirements grow.

- Reliability and Security: Offers 99.999% uptime with fault-domain-aware erasure coding and granular access controls, ensuring data sovereignty and compliance.

- Multitenant Flexibility: Native multitenancy supports shared infrastructure for diverse workloads, such as RAG and streaming, without performance degradation.
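To put the 99.999% ("five nines") figure in concrete terms, the sketch below converts an availability target into an allowed-downtime budget, assuming a 365-day year. The constant and function names are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # non-leap year


def downtime_budget_minutes(availability: float,
                            period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Maximum downtime allowed over a period for a given availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_minutes


if __name__ == "__main__":
    per_year = downtime_budget_minutes(0.99999)
    per_30_days = downtime_budget_minutes(0.99999, period_minutes=30 * 24 * 60)
    print(f"five nines: {per_year:.2f} min/year, {per_30_days * 60:.0f} s per 30 days")
```

Five nines works out to roughly 5.3 minutes of downtime per year, or under half a minute per month.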

Why Choose OCI and DDN Infinia for AI?

The OCI-DDN Infinia solution combines OCI’s high-performance compute with Infinia’s software-defined AI storage, delivering a unified platform for any data, application, or environment. Unlike competing solutions that require manual tuning or lag in metadata operations, Infinia offers:

- Accelerated Performance: Low latency, optimized for OCI’s 100 GbE fabric.

- Scalable Multi-Tenancy: Supports thousands of tenants with automated SLA-driven allocation, ensuring secure, efficient AI infrastructure solutions.

- Future-Ready Design: Integrates with NVIDIA AI Enterprise (NeMo, NIM, CUDA) and supports Blackwell and Spectrum-X, and has been trusted by AI leaders such as NVIDIA for over eight years.

Get Started with DDN Infinia on OCI

Ready to unify your data and accelerate AI innovation?

Explore how DDN Infinia on Oracle Cloud Infrastructure delivers high-performance, scalable storage for AI workloads—from model prep to inference.

- Visit the Oracle partner page on our website to learn more, or connect directly with the Oracle team at DDN for a personalized consultation.

- To request a proof of concept, contact Pinkesh Valdria at Oracle and experience the power of Infinia in action.

- Stay up to date with the latest AI storage insights—follow DDN on LinkedIn and X.

Infinia unifies multi-modal data (video, audio, text) across cloud and core, with edge support planned for 2025, streamlining data management and reducing complexity for AI infrastructure.

Infinia delivers high throughput, low latency (25x better than AWS S3), and supports 200,000+ GPUs, accelerating AI workloads like RAG and inference.

Finance (real-time fraud detection), healthcare (medical imaging), and media (content streaming) leverage Infinia’s low-latency S3 access for faster, reliable outcomes.

Trusted by NVIDIA and xAI, Infinia’s software-defined platform offers unmatched scalability, security, and multi-tenancy, with seamless integration for NVIDIA AI Enterprise tools. Read about customer success stories on the DDN customer webpage.