We’ve recently seen a storage vendor trumpeting a modest installation and proudly comparing its performance favourably to a 4-year-old HDD-based system. It is remarkable that they achieved only 30% more performance whilst using 100% flash, which offers roughly 15x more bandwidth and 5,000x more IOPS per device. The real question is: how can it be so slow? You’ll find the answer below.

DDN, on the other hand, is shipping the largest all-flash systems for the largest AI supercomputers in the world this month. We deliver 30x more performance per rack unit than these NFS-based systems.

And there is talk of EXAScale too…

What does EXAScale really mean when it comes to storage? I presented on this topic recently, and I argue that it comes down to efficiency and robustness at scale. Datacenters are usually power-limited, and storage can make or break total datacenter productivity and efficiency. Consider this short quote from the operators of the second-fastest production supercomputer storage system in the world:

“The Leonardo supercomputer delivers game-changing AI and HPC performance for the betterment of European research. This kind of power needs an extremely well-optimized storage environment to gain maximum efficiency,” said Mirko Cestari, HPC and Cloud technology coordinator at CINECA. “We chose DDN because of its ability to accelerate all stages of the AI and HPC workloads lifecycle.” – Source

- Extremely well-optimized storage environment to gain maximum efficiency

Storage drives efficiency across the entire datacenter: applications waiting on storage burn energy and limit productivity.

- Ability to accelerate all stages of the AI and HPC workloads lifecycle

That means ingest, preparation, deep learning, checkpointing, post-processing and so on, so the full spectrum of IO patterns must be served well.

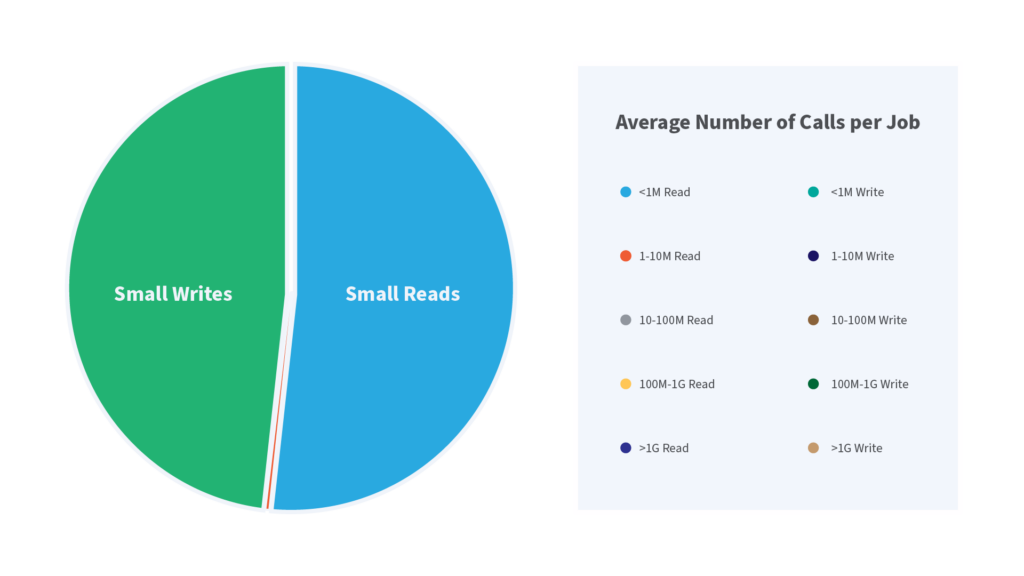

Let’s look at what applications really do on large-scale supercomputers. A recent paper used Darshan to characterize the IO patterns of over 23,000 machine learning jobs across 9 disciplines. Two findings stand out: write performance is just as important as read performance, and the vast majority of IO calls are smaller than 1 MB. This calls for a storage system that delivers both strong IOPS and balanced read and write performance. And the larger the system (think EXAScale), the more critical these capabilities become.
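Why do small IOs shift the burden onto IOPS? Throughput is simply IOPS x IO size, so for a fixed bandwidth target the required IOPS grows as the IO size shrinks. The back-of-the-envelope sketch below uses an illustrative 100 GB/s target and a few IO sizes of our own choosing (not figures from the paper): sustaining 100 GB/s takes 100,000 IOPS at 1 MB, but 25 million IOPS at 4 KB.

```python
def required_iops(target_gb_s: float, io_size_bytes: int) -> float:
    """IOPS needed to sustain a target throughput at a fixed IO size.

    Uses decimal units (1 GB = 1e9 bytes), as storage bandwidth is usually quoted.
    """
    if io_size_bytes <= 0:
        raise ValueError("io_size_bytes must be positive")
    return target_gb_s * 1e9 / io_size_bytes


# Illustrative target of 100 GB/s at a few IO sizes at and below 1 MB.
for size in (1_000_000, 64_000, 4_000):
    print(f"{size:>9,} B IOs -> {required_iops(100, size):>12,.0f} IOPS")
```

The same arithmetic applies in reverse: a system that only performs well on large sequential IO will deliver a small fraction of its headline bandwidth on the sub-1 MB calls that dominate these workloads.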

NVIDIA’s Prethvi Kashinkunti, Senior Data Center Systems Engineer, underlined this when discussing how their team works with DDN:

“Having the storage technology that can provide the appropriate amount of bandwidth both for reads and for writes is critical to make sure we maintain efficiency”

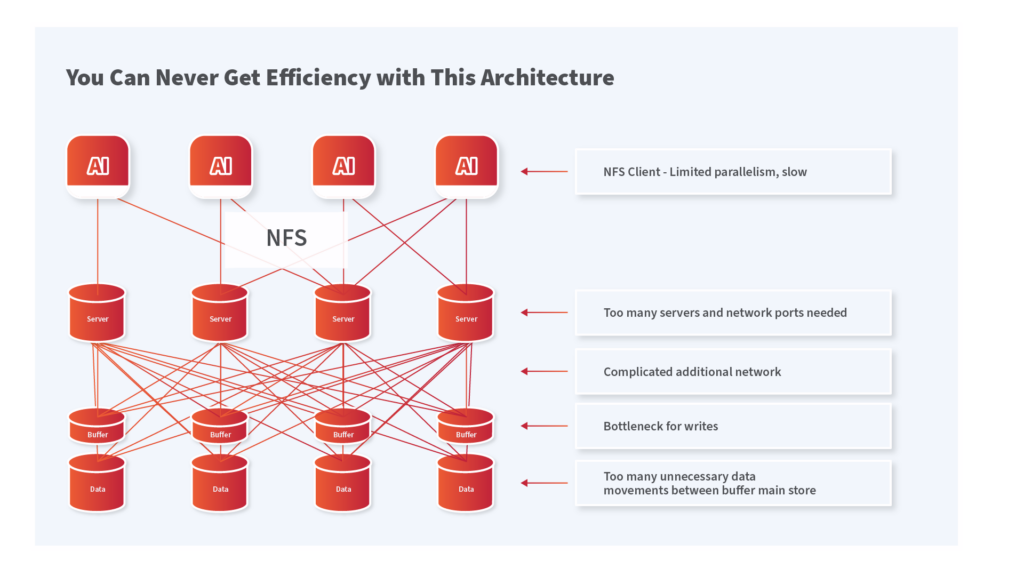

The key is delivering the hardware capabilities of modern devices to applications. Today’s NFS/QLC architectures fall short because of inefficiencies inherent in their design.

These architectures funnel write IO into a limited set of devices and require every write to be staged on that tier, then collected and rewritten to QLC flash. As a result, efficiency is halved (each byte is written twice) and write throughput is very low. How low? Below is an example of a DDN system and an NFS/QLC system each delivering 800GB/s of write performance.
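The "efficiency is halved" argument is simple arithmetic. Here is a minimal sketch under a deliberately simplified model of our own (not a measurement of any product): in a tiered design every application write lands on a small buffer pool and is later rewritten to QLC, so the slower of the two stages caps sustained write bandwidth and flash absorbs two physical writes per application byte. The pool sizes and per-device speeds are hypothetical.

```python
def pool_write_bw(devices: int, gb_s_per_device: float) -> float:
    """Aggregate write bandwidth of a pool whose devices all accept writes in parallel."""
    return devices * gb_s_per_device


def tiered_write_bw(buffer_gb_s: float, qlc_gb_s: float) -> float:
    """Sustained application write bandwidth when every write lands on a buffer
    tier first and is later rewritten to QLC.

    In steady state the buffer must drain as fast as it fills, so the slower
    stage sets the ceiling. (Simplification: ignores the buffer's read load
    during draining, garbage collection and data-protection overhead.)
    """
    return min(buffer_gb_s, qlc_gb_s)


def flash_bytes_written(app_bytes: float, tiered: bool) -> float:
    """Physical bytes written to flash: twice per application byte when tiered."""
    return app_bytes * (2 if tiered else 1)


if __name__ == "__main__":
    # Hypothetical pools, not measured figures:
    # 4 buffer devices at 5 GB/s each, 20 QLC devices at 2 GB/s each.
    buffer_bw = pool_write_bw(4, 5.0)
    qlc_bw = pool_write_bw(20, 2.0)
    print(f"direct parallel writes to QLC: {qlc_bw:.0f} GB/s")
    print(f"tiered through the buffer:     {tiered_write_bw(buffer_bw, qlc_bw):.0f} GB/s")
    print(f"flash writes per TB of app data (tiered): {flash_bytes_written(1.0, tiered=True):.0f} TB")
```

In this toy model the tiered path is capped by the small buffer pool, and even when the buffer is fast enough, every application byte still costs two flash writes.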

It’s the same story for IOPS. DDN offers 30x more IOPS per rack unit than NFS/QLC alternatives. DDN exposes the underlying device performance to applications through a fully parallel data path from client to data, with no unnecessary data movement. The DDN intelligent client enables scaling today to the very largest compute systems.

Until recently, your only options for QLC storage fell far short on IOPS, throughput, metadata performance and latency, wasting productivity and datacenter energy. DDN’s new parallel scale-out platform with QLC changes all that, delivering full DDN parallel performance so that AI and HPC infrastructure reaches the highest efficiency without the added complexity of scale-out NAS. High Performance QLC starts NOW!