By Isabella Richard, Product Marketing Manager

AI is now essential for enterprises seeking a competitive edge. Scaling complex workloads requires more than powerful GPUs; it demands a complete data infrastructure that ensures timely, governed access to the right data without bottlenecks.

DDN, in collaboration with NVIDIA, developed the first-ever validated reference architecture for NVIDIA Cloud Partners (NCPs), a blueprint that gives service providers a proven foundation for delivering next-generation AI services. The blueprint integrates NVIDIA HGX systems with the DDN Data Intelligence Platform, powered by Infinia and EXAScaler®. It is designed to accelerate every stage of the AI lifecycle, from data ingestion and preparation to model training, checkpointing, and inference, while meeting the highest standards for enterprise reliability and security.

The AI Infrastructure Challenge – DDN’s Take

The AI service provider market is expanding rapidly alongside increasing customer expectations. Enterprise AI clients need consistent high performance, strong security, and the ability to integrate seamlessly into hybrid and multi-cloud workflows.

Hyperscale AI workloads often stall due to storage latency, metadata overhead, and limited throughput. EXAScaler® serves as the reference architecture’s high-performance backbone for training and checkpointing. It also powers NVIDIA’s internal supercomputers, delivering up to 30X better performance and 15X lower power usage.

These are the exact challenges the DDN + NVIDIA reference architecture was built to solve for AI cloud providers at scale.

What’s in the Reference Architecture?

This validated reference architecture, co-developed by DDN and NVIDIA, defines the gold standard for building production-grade AI services at scale. It includes:

- NVIDIA HGX compute with Magnum IO: Delivers maximum GPU utilization and ultra-fast data movement for large-scale AI training and inference.

- DDN EXAScaler® parallel file system: The high-performance storage backbone for AI model training and checkpointing, feeding GPUs efficiently in AI factories built on NVIDIA HGX.

- DDN Data Services: DDN S3 Data Services provide hybrid file and object access to a single shared namespace. Multi-protocol access to this unified namespace gives workflows tremendous flexibility and simplifies end-to-end integration, while the S3 Data Services architecture delivers robust performance, scalability, security, and reliability.

- DDN Multi-Tenancy: Through its native client and comprehensive digital security framework, DDN makes it simple to operate a secure multi-tenant environment at scale, so providers can share HGX systems across a large pool of users while maintaining secure data segregation. Multi-tenancy provides quick, seamless, dynamic HGX resource provisioning that eliminates resource silos, complex software release management, and unnecessary data movement between storage locations.

- Integrated support for GPUDirect, NeMo, RAG, NIM, and multi-cloud workflows: Ensures NCPs can launch modern AI services that scale seamlessly across hybrid and multi-tenant environments.

Together, these components form a unified, field-proven blueprint that enables providers to deliver AI services at scale with confidence.

Why This Reference Architecture Works for Modern AI

The blueprint solves AI infrastructure challenges with scalable object storage, integrated metadata services, and high-throughput data access to keep GPUs fully utilized. For providers, this means the ability to stand up production-scale AI clouds faster, integrate seamlessly into multi-tenant and hybrid environments, and lower total cost of ownership by maximizing GPU efficiency and minimizing infrastructure waste. Together, these capabilities create an environment where AI services run at full speed, even as datasets grow and workloads become more complex.

Say Goodbye to Idle GPUs

For AI services to be profitable at scale, GPU resources must be kept fully active. A central goal of the reference architecture is to eliminate idle GPUs, the #1 cost drain in AI services. Integration with NVIDIA Magnum IO GPUDirect Storage enables direct transfers between storage and GPU memory without staging data through a CPU bounce buffer, cutting load and checkpoint times by 15X. These performance gains mean faster model training cycles, quicker iterations, and reduced infrastructure costs per workload.
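To make the link between checkpoint speed and idle GPUs concrete, here is a minimal back-of-the-envelope sketch. It assumes training pauses completely during each synchronous checkpoint, and every input (a 1,000 GB checkpoint, 10 GB/s write bandwidth, one checkpoint every 30 minutes) and the function name are hypothetical, chosen only for illustration; they are not DDN or NVIDIA measurements.

```python
def checkpoint_idle_fraction(checkpoint_gb: float, write_gb_per_s: float, interval_s: float) -> float:
    """Hypothetical model: fraction of wall-clock time GPUs sit idle
    when training stalls for every synchronous checkpoint write."""
    if checkpoint_gb < 0 or write_gb_per_s <= 0 or interval_s < 0:
        raise ValueError("sizes and intervals must be non-negative; bandwidth must be positive")
    stall_s = checkpoint_gb / write_gb_per_s  # time spent writing one checkpoint
    return stall_s / (interval_s + stall_s)   # stall share of each train+checkpoint cycle


# Illustrative (assumed) inputs, not benchmark data.
baseline = checkpoint_idle_fraction(1000, 10, 1800)
faster = checkpoint_idle_fraction(1000, 10 * 15, 1800)  # same checkpoint written 15X faster

print(f"baseline idle: {baseline:.2%}")  # baseline idle: 5.26%
print(f"15X faster:    {faster:.2%}")    # 15X faster:    0.37%
```

Under these assumptions, the stall time per checkpoint drops from 100 seconds to under 7, so the share of time GPUs spend waiting falls by roughly the same factor. Real deployments may overlap checkpointing with compute, which changes the arithmetic.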

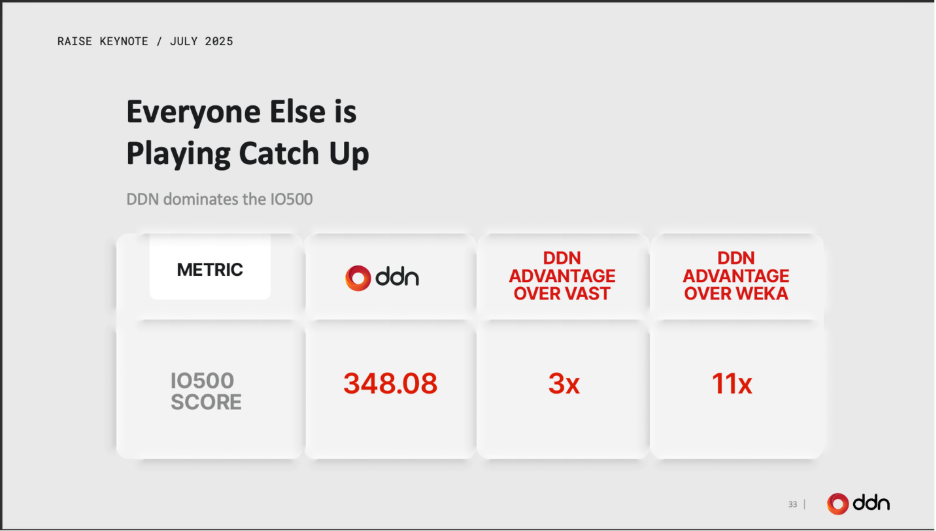

These benchmark results demonstrate how the reference architecture performs under real-world AI workloads. On the IO500 benchmark, DDN holds 7 of the top 10 production scores, delivering 3X to 11X more real-world AI/HPC value than competitors. This is direct proof that service providers can scale confidently and predictably, even under intense, distributed GPU demand.

Innovating the AI Services Portfolio for Enterprise Demand

With this reference architecture, service providers can move beyond basic AI hosting to deliver value-added services that strengthen customer relationships and create recurring revenue opportunities.

One example is enterprise-scale disaster recovery for AI workloads. The platform supports real-time replication of large datasets across hybrid environments, enabling providers to offer business continuity solutions for mission-critical AI applications. This is especially valuable for regulated industries where data resilience is a requirement.

Another area of growth is retrieval-augmented generation (RAG). The architecture's metadata-rich object storage integrates with NVIDIA's advanced AI frameworks to support RAG services that combine generative AI models with proprietary datasets. This allows customers to deploy tailored AI assistants, search tools, and knowledge systems that are specific to their business needs.

Safeguarding AI Workloads with Enterprise Data Controls

Data security and governance are integral to NCPs operating in regulated environments. The reference architecture provides end-to-end controls that ensure data privacy, immutability, and compliance.

For service providers, these capabilities mean they can meet the stringent requirements of industries such as finance, healthcare, and automotive, while still delivering the high performance those workloads demand.

Proven at Scale in Production AI Environments

The DDN + NVIDIA reference architecture is powering some of the most demanding AI environments in production today. A leading hedge fund accelerated algorithm development by 3X and cut fraud detection latency by 70%, saving millions in infrastructure costs. TGen reduced genomics pipeline times from 12 hours to under 2, boosting productivity while lowering compute costs by 80%. Supercomputing centers like CINECA and Helmholtz Munich rely on DDN to drive unprecedented GPU utilization at scale. Read some of our customer success stories here to learn more.

In life sciences, the DDN Data Intelligence Platform accelerates genomics workflows by enabling the rapid processing of multi-terabyte datasets, reducing time to discovery. In financial services, it supports real-time AI analytics for fraud detection and risk management, where speed and reliability are nonnegotiable. These real-world deployments prove the platform’s ability to perform consistently, scale effectively, and integrate into complex operational environments.

Built for the Future of AI

This reference architecture, certified by both DDN and NVIDIA, delivers an integrated, future-ready infrastructure that evolves with the needs of growing AI workloads. Whether onboarding new customers, scaling for a massive training run, or expanding into new regions, performance remains predictable and efficiency uncompromised. This blueprint empowers providers to deliver scalable, efficient, and secure AI services, ready for the demands of modern and future workloads.

Looking ahead, DDN announced in March 2025 the integration of the NVIDIA AI Data Platform reference design with EXAScaler® and Infinia 2.0, alongside full support for NVIDIA Blackwell-based systems, including DGX and HGX platforms. Combined with NVIDIA BlueField-3 DPUs and Spectrum-X networking, this delivers ultra-fast, end-to-end AI data pipelines that eliminate bottlenecks, maximize GPU utilization, and accelerate the deployment of agentic AI applications at scale.

Providers that can meet enterprise requirements without compromise will define the next generation of AI services. The DDN + NVIDIA reference architecture provides the foundation to do exactly that, transforming AI infrastructure into scalable, secure, profitable services. Visit our website to learn more.