Executive Summary

Transform GPU Investments into Unstoppable Competitive Advantage

A global AI leader, pioneering breakthroughs at massive scale, selected Infinia as the only storage platform capable of revolutionizing their model development cycles. After rigorously testing every available solution, the company found that conventional storage platforms caused catastrophic GPU inefficiencies – costing thousands of dollars per idle minute. In stark contrast, Infinia delivers game-changing object listing speeds over 75x faster than traditional solutions, translating directly into relentless GPU productivity, vastly accelerated innovation cycles, and unparalleled market advantage.

Business-Transforming Results:

- Instantaneous Data Discovery: 75x faster object listing (30M objects/second) eradicates costly GPU idle time, driving rapid experimentation

- Unmatched GPU Utilization: >1.1TB/s model loading across 150,000 simultaneous connections ensures near 100% GPU productivity, unlocking $8M-35M+ annual savings

- Continuous Innovation: Daily ingestion of 5-6PB enables constant training on the freshest data, delivering insights days ahead of competitors

- Accelerated Time-to-Market: Dramatic reduction in model development cycles by eliminating storage-induced wait times, accelerating innovation from weeks to hours

Strategic Impact: Choosing Infinia revolutionized their AI infrastructure from the ground up. Traditional solutions imposed crippling delays of 15-30 minutes per training cycle – wasting millions annually. Infinia’s instant data availability and breakthrough capabilities transformed these bottlenecks into competitive rocket fuel, powering instant productivity, unprecedented GPU efficiency, and relentless innovation.

The Bottom Line: When every experiment costs $50,000-$500,000, storage isn’t infrastructure – it’s survival. Infinia is uniquely engineered to maximize AI competitiveness, obliterating traditional storage constraints and delivering decisive strategic advantage.

The Challenge: Finding Storage Technology for AI Scale

Today’s AI companies – from emerging leaders with 10,000 GPUs to hyperscale operations with 100,000+ GPUs – face unprecedented storage challenges when evaluating technology options:

GPU Economics and Scale Limitations

- H100 clusters ranging from 10,000 to 100,000+ GPUs cost $40M to $400M+ annually

- Traditional storage is inadequate for serious AI workloads at scale: existing solutions cannot deliver the unified capabilities and scale these workloads require

- Infinia eliminates the architectural complexity that constrains AI development velocity

The Infinia Solution: Purpose-Built for AI Excellence

This customer selected Infinia after comprehensive evaluation of all available storage technologies, recognizing that Infinia could deliver the scale and capabilities required for AI workloads at this magnitude.

Advanced Architecture for Massive AI Scale

- Scales from 10PB to 150PB+, supporting 10,000 to 100,000+ GPUs

- AI-optimized capabilities that traditional systems cannot match

- Single platform scalability that eliminates the multi-system complexity other solutions require

- Purpose-built features delivering results unavailable with other technologies

AI Workload Optimization

- Advanced capability for multimodal data at PB scale that traditional storage cannot handle efficiently

- High-throughput ingestion from GB to multi-PB daily without the degradation of legacy solutions

- Exceptional bandwidth up to 1.1TB/s+ that other platforms cannot deliver at this scale

- Instant model switching capabilities enabled by Infinia’s architecture

The Environment

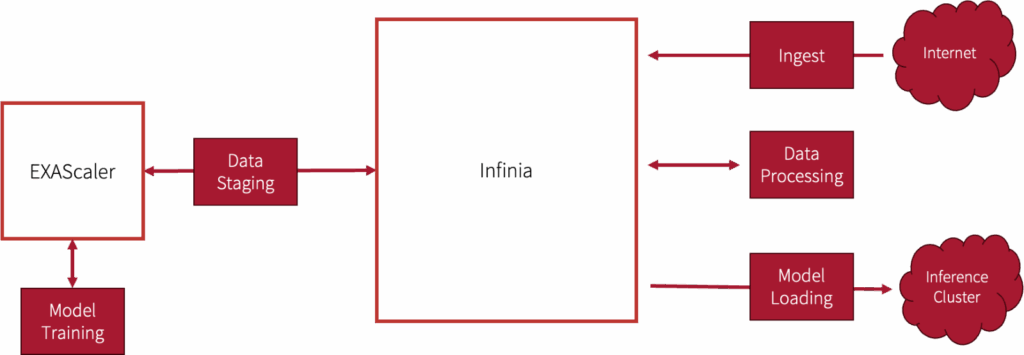

AI Workflow

- Data Ingest

  - Downloading internet content (web pages, video, etc.) requires hundreds of petabytes of storage, which Infinia can organize in a single unified system

  - Competitive solutions that can’t scale impose complex and costly workarounds to accommodate their limitations

- Data Processing

  - Infinia interoperates with Spark/Hadoop, PyTorch, TensorFlow, and a variety of other ML frameworks through its low-latency S3 interface

  - Customers can leverage diverse applications and frameworks to accelerate time to market for AI solutions

- Model Training

  - Read training data from EXAScaler and from Infinia

  - Write LLM checkpoints to EXAScaler

- Data Staging

  - Transfer checkpoints from EXAScaler to Infinia for curation and model loading

- Model Loading

  - Load Large Language Models to hundreds of thousands of inference GPU systems simultaneously through hundreds of high-performance S3 endpoints
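Because the workflow above reaches Infinia through an S3 interface, data discovery from a framework or pipeline can be sketched with any S3-compatible client. The example below is a minimal illustration, not Infinia-specific code: it assumes a boto3-style client object, and the helper name `iter_keys`, the endpoint URL, the bucket, and the prefix are all hypothetical placeholders.

```python
from typing import Any, Iterator


def iter_keys(s3_client: Any, bucket: str, prefix: str = "") -> Iterator[str]:
    """Yield every object key under `prefix`, following S3 pagination.

    `s3_client` is assumed to behave like a boto3 S3 client, which exposes
    get_paginator("list_objects_v2").
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page has no "Contents" entry when nothing matches the prefix.
        for obj in page.get("Contents", []):
            yield obj["Key"]


# Usage sketch (needs boto3 and a reachable S3 endpoint; every value below
# is a hypothetical placeholder, not a documented Infinia setting):
#
#   import boto3
#   client = boto3.client("s3", endpoint_url="https://s3.example.internal")
#   for key in iter_keys(client, "training-data", "shards/"):
#       print(key)
```

Passing the client in as a parameter keeps the helper independent of any particular endpoint, so the same listing code can point at different S3-compatible systems.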

Breakthrough Results: Revolutionary Object Listing Speed

Object Listing Transformation: During testing, the customer found that traditional storage would take 15-30 minutes to locate training data, creating unacceptable GPU idle time. Infinia consistently lists objects more than 75x faster than traditional solutions: 450,000 objects/second in a single bucket and 30,000,000 objects/second in aggregate, with sub-second data discovery. Traditional solutions cannot provide these capabilities at this scale.
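To make the listing rates concrete, the following back-of-the-envelope sketch converts them into wall-clock time. The rates and the 75x factor come from the figures above; the 500-million-object dataset is a hypothetical example, and deriving a baseline rate by dividing the aggregate rate by 75 is an assumption rather than a measured result.

```python
# Listing rates quoted in the text (objects per second).
SINGLE_BUCKET_RATE = 450_000
AGGREGATE_RATE = 30_000_000
SPEEDUP = 75  # "more than 75x", treated here as a lower bound


def listing_seconds(num_objects: int, objects_per_second: float) -> float:
    """Time in seconds to enumerate `num_objects` at a steady listing rate."""
    if objects_per_second <= 0:
        raise ValueError("objects_per_second must be positive")
    return num_objects / objects_per_second


def main() -> None:
    dataset_objects = 500_000_000  # hypothetical dataset size
    # Assumed baseline: the aggregate rate divided by the quoted speedup.
    baseline_rate = AGGREGATE_RATE / SPEEDUP

    fast = listing_seconds(dataset_objects, AGGREGATE_RATE)
    slow = listing_seconds(dataset_objects, baseline_rate)
    print(f"Aggregate rate: {fast:.1f} s")
    print(f"Assumed baseline: {slow / 60:.1f} min")


if __name__ == "__main__":
    main()
```

Under these assumptions, the hypothetical dataset takes seconds to list at the aggregate rate and about 20 minutes at the assumed baseline rate.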

Model Loading Revolution: Technology evaluation revealed that alternative solutions would create critical bottlenecks with GPUs costing $40,000-300,000+ per hour sitting idle during model weight transfers. Infinia’s architecture delivers scalable throughput up to 1.1TB/s with sub-minute model loading and zero GPU idle time – results that other storage technologies cannot match.
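The relationship between aggregate throughput and load time can be sketched with simple arithmetic. Only the 1.1TB/s figure below comes from the text. The 140GB checkpoint size, the replica count, and the decimal units (1TB = 10^12 bytes) are assumptions chosen for illustration, and the model ignores protocol overhead and contention.

```python
TB = 10**12  # decimal terabyte, an assumption about the units in the text
GB = 10**9

AGGREGATE_BANDWIDTH = 1.1 * TB  # bytes per second, from the quoted 1.1TB/s


def fleet_load_seconds(model_bytes: float, replicas: int,
                       aggregate_bytes_per_second: float) -> float:
    """Seconds to deliver one full model copy to each replica, assuming the
    aggregate bandwidth is shared evenly and fully used."""
    if aggregate_bytes_per_second <= 0:
        raise ValueError("aggregate_bytes_per_second must be positive")
    return model_bytes * replicas / aggregate_bytes_per_second


def max_replicas_within(budget_seconds: float, model_bytes: float,
                        aggregate_bytes_per_second: float) -> int:
    """Largest number of full model copies deliverable within the time budget."""
    return int(budget_seconds * aggregate_bytes_per_second // model_bytes)


if __name__ == "__main__":
    checkpoint = 140 * GB  # hypothetical checkpoint size
    seconds = fleet_load_seconds(checkpoint, 400, AGGREGATE_BANDWIDTH)
    print(f"400 replicas: {seconds:.1f} s")
    print(max_replicas_within(60, checkpoint, AGGREGATE_BANDWIDTH), "replicas in 60 s")
```

The same two functions can be rerun with an organization's own checkpoint sizes and fleet sizes to check whether a sub-minute loading target is plausible at a given bandwidth.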

Continuous Training Capability: Traditional storage technologies impose batch-processing constraints, meaning models would train on yesterday’s data. Infinia enables real-time data ingestion and immediate availability of new training data at massive scale, capabilities that legacy storage solutions do not offer.

Business Impact: Competitive Advantage Only Infinia Enables

GPU Utilization Optimization: During evaluation, the customer modeled that traditional storage would result in 20-25% GPU idle time due to storage bottlenecks. Infinia achieves under 3% idle time from day one – delivering $8M-35M+ annually in GPU utilization advantages that legacy storage solutions cannot match.
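The link between idle time and cost can be illustrated with a simple linear model. The customer's actual financial model is not published here, so this is only a sketch: it assumes idle cost is proportional to annual GPU spend, uses a hypothetical $100M annual spend from within the $40M to $400M+ range cited earlier, and treats "under 3%" as exactly 3%. It is not expected to reproduce the ranges above exactly.

```python
def idle_cost(annual_gpu_spend: float, idle_fraction: float) -> float:
    """Annual spend attributable to idle GPUs, assuming cost scales linearly
    with the fraction of time GPUs sit idle."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return annual_gpu_spend * idle_fraction


def annual_savings(annual_gpu_spend: float, idle_before: float,
                   idle_after: float) -> float:
    """Difference in idle cost between two idle-time fractions."""
    return (idle_cost(annual_gpu_spend, idle_before)
            - idle_cost(annual_gpu_spend, idle_after))


if __name__ == "__main__":
    spend = 100_000_000  # hypothetical annual GPU spend in dollars
    for idle_before in (0.20, 0.25):  # modeled idle time from the text
        saved = annual_savings(spend, idle_before, 0.03)
        print(f"{idle_before:.0%} -> 3% idle: ${saved / 1e6:.0f}M per year")
```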

Infrastructure Advantage: Rather than building on multiple storage systems that traditional approaches would require, the customer selected Infinia’s unified platform, avoiding $2M-8M+ annually in complexity and operational overhead that other solutions cannot eliminate at this scale.

Innovation Velocity: Testing showed traditional storage would limit them to 3-4 training experiments per month. Infinia’s breakthrough advantages enable 15-20 experiments monthly – competitive benefits that are not possible with legacy storage solutions.

Operational Velocity

- Faster Time-to-Market: Accelerated model loading enables quicker deployment of updates

- High-Velocity Business Model: Storage capabilities directly support rapid iteration cycles

- Competitive Advantage: Data operations efficiency translates to faster innovation cycles

Operational Efficiency

- Managed Service Value: Hands-off operation managed by storage experts

- Reduced Engineering Overhead: Teams focus on core AI/ML capabilities instead of infrastructure

- Simplified Architecture: Single storage system eliminates complex multi-system management

Business-Critical Reliability

- Proactive Support: Call-home functionality enables DDN to resolve issues before the customer is aware of them

- Advanced Telemetry: Comprehensive monitoring and observability at massive scale

- Proven Stability: Validated operations under unprecedented load conditions

Total Economic Impact

Comprehensive financial modeling during technology selection showed that traditional alternatives would impose significant hidden costs through GPU inefficiency and operational complexity that Infinia eliminates:

| Cost Category | Annual Savings Range | 3-Year Total |
| --- | --- | --- |
| GPU Efficiency Gains | $8M – $35M+ | $24M – $105M+ |
| Infrastructure Simplification | $2M – $8M+ | $6M – $24.6M+ |
| Operational Efficiency | $0.3M – $6M+ | $1M – $18M+ |
| Competitive Velocity Premium | $17M – $267M+ | $50M – $800M+ |
| Total Annual Value | $27M – $316M+ | $80M – $929M+ |

Cost savings scale with infrastructure size: the lower end of each range applies to 10,000-GPU deployments, the higher end to 100,000+ GPU environments.

3-Year Value Advantage Over Traditional Storage: $80M-929M+

Why Infinia Works for Leading AI Companies

Technical Leadership: Comprehensive evaluation revealed that Infinia meets AI requirements at this scale. Object listing speeds more than 75x faster than traditional storage represent a transformational capability that those technologies cannot deliver.

Scale Capability: Infinia handles the massive scale requirements of serious AI development. Total cost of ownership analysis showed that while traditional storage may appear cost-effective initially, the GPU efficiency gains and operational requirements make Infinia the economically viable choice for large-scale AI operations.

Strategic Advantage: Infrastructure choices are competitive advantages in AI, and Infinia provides the foundation for serious AI development at scale. Companies building on Infinia can iterate faster than competitors constrained by traditional storage limitations, scale beyond what legacy solutions allow, and achieve GPU economics that are not possible with other solutions.

Contact our AI infrastructure specialists to learn how Infinia can deliver the scale and operational advantages over traditional storage that your AI development requires.