Successfully executing an AI strategy is a major undertaking for any business. At NVIDIA’s GTC event, DDN explained how to unify organizational data and how to analyze and share previously siloed data with a storage platform that delivers virtually unlimited scale and concurrency to simplify AI workflows and accelerate revenue. DDN has developed and delivered more AI data storage than any other company in the world for organizations across financial services, life sciences, automotive, manufacturing, and government. With tighter integrations into the AI ecosystem, DDN makes the whole infrastructure (network, GPU, CPU) more efficient and speeds up all aspects of the AI data lifecycle, from ingest through deep learning and inference.

Watch now as Dr. James Coomer demonstrates how these integrations between DDN storage and AI infrastructure and workloads deliver the best in scalability and performance. This session also covers how architectures use NVIDIA® BlueField® data processing units and GPUDirect Storage to achieve maximum performance and scale in a secure data environment.

How DDN and NVIDIA Keep Data Producing Results Faster, Together

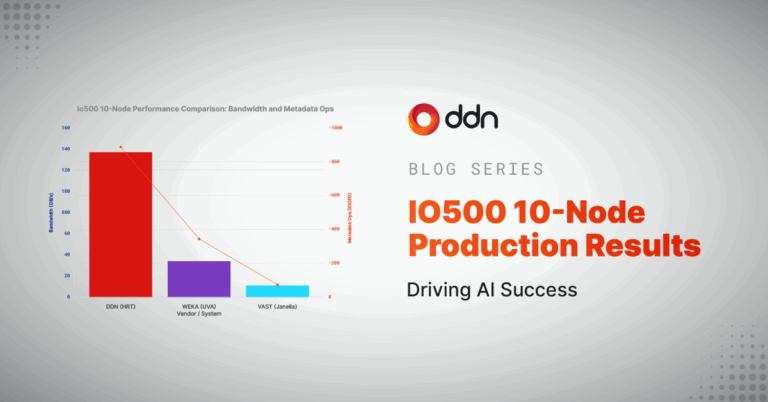

Building a hyper-efficient AI production platform depends on a data storage platform that can accelerate every part of the AI lifecycle. Many storage systems have an Achilles’ heel: for example, poor write performance, ungainly management of the GPU compute environment, or limited support for the data transfer methods used by AI frameworks. These and other weaknesses can cripple different parts of the end-to-end pipeline, each creating a major problem for AI success. Uniquely, DDN and NVIDIA have together tested at extreme scale with real production workloads, and have built optimizations and integrations between the AI and data worlds to eliminate these problem areas across the whole pipeline.

How DDN and NVIDIA Keep Data Safe

AI is creating new revenue streams at an unprecedented rate. As organizations work to extract value from their data, that data becomes more valuable, which makes it a target for potential cyber breaches.

Keeping large datasets secure while maintaining accessibility for authorized users can introduce a web of complexities that is challenging to untangle. DDN and NVIDIA simplify AI workflows and accelerate revenue for organizations by integrating the strongest security measures, keeping costs in check, eliminating data silos, and keeping configurations simple.

Here are some other resources you might find interesting:

- AI Success Guide: From data-first strategies to scalable infrastructure, our Guide provides keys to help you avoid the most common AI pitfalls and accelerate deployment.

- DDN Performance Brief: Validated performance information with NVIDIA DGX A100 systems.

- NVIDIA POD Reference Architecture: NVIDIA POD with DGX A100, DDN A3I, and NVIDIA® Mellanox® InfiniBand network switches. Go pro and get AI results fast by setting your infrastructure up to scale successfully.

- NVIDIA SuperPOD Reference Architecture: A first-of-its-kind artificial intelligence (AI) supercomputing infrastructure that delivers ground-breaking performance. This infrastructure can be deployed in weeks as a fully integrated system and is designed to solve the world’s most challenging AI problems. Think big. Move fast. Break through barriers with extreme performance and scale from the AI supercomputing experts.