Enterprise AI success demands a paradigm shift away from traditional IT. It requires a data intelligence platform designed specifically for large language model ingest, training, inference, and GenAI, on premises and across multiple clouds. It must deliver robust and secure application acceleration across namespaces and data domains, while maximizing GPU utilization and minimizing data center floorspace and power use.

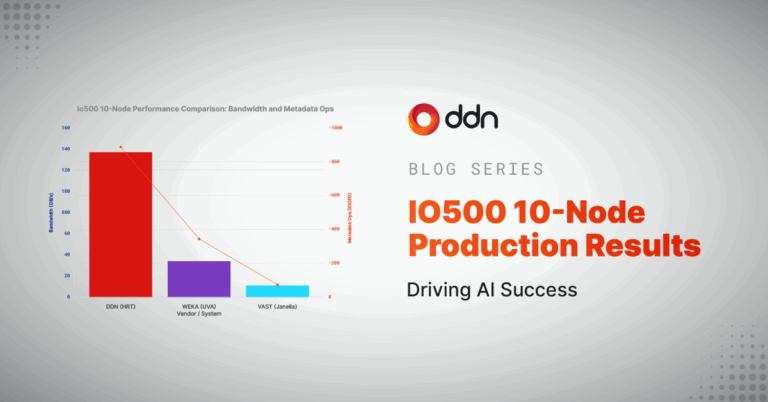

Incremental improvements to legacy storage architectures are not enough. DDN leads with a fundamentally different approach, one that powers AI for hundreds of the world’s enterprises in life sciences, financial services, automotive, and manufacturing, as well as cloud providers and the most demanding AI workloads at NVIDIA and xAI. As the foundation for NVIDIA AI deployments, DDN is the trusted data intelligence platform for driving business value from AI workloads with unmatched reliability and performance.

Building maximum efficiency into our customers’ workflows is not all about raw bandwidth. It’s about orchestrating the entire AI pipeline: accelerating ingestion, optimizing preprocessing, eliminating I/O bottlenecks (including large model checkpointing), and ensuring real-time, high-speed inference. DDN’s Data Intelligence platform is the backbone of hundreds of AI environments on premises and in the cloud, powering systems like xAI’s Colossus and NVIDIA’s AI clusters.

Our expertise in developing and delivering AI data intelligence platforms that accelerate applications by orders of magnitude, while maximizing data center and cloud efficiency, reveals many critical truths. One of them has been touted repeatedly by NVIDIA and its CEO Jensen Huang: checkpointing performance makes or breaks production large-scale AI training. Whether synchronous or asynchronous, data must be written to storage. Scale-out NFS systems take 15-20 minutes to save language model parameters. DDN’s parallel architecture slashes this to a fraction of that time while sustaining terabyte-per-second write speeds, and scales beyond multiple TB/s during peak operations. Guaranteed low-latency checkpoints to consistent storage are critical because, at scale, deployments inevitably experience GPU and infrastructure failures. Losing days of training to insufficient checkpointing infrastructure isn’t just inefficient – it’s operationally devastating.
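To make the relationship between write bandwidth and checkpoint time concrete, here is a minimal back-of-the-envelope sketch. It assumes an idealized model in which checkpoint time is simply checkpoint size divided by sustained aggregate write bandwidth. The function name, the 10 TB checkpoint size, and the two bandwidth values are hypothetical inputs chosen for illustration, not measured DDN or NVIDIA figures.

```python
def checkpoint_write_seconds(checkpoint_bytes: float, write_bytes_per_second: float) -> float:
    """Idealized time to persist one checkpoint: size / sustained write bandwidth."""
    if write_bytes_per_second <= 0:
        raise ValueError("write bandwidth must be positive")
    return checkpoint_bytes / write_bytes_per_second


# Hypothetical inputs for illustration only (not vendor-published numbers).
CHECKPOINT_BYTES = 10e12  # assumed 10 TB of model and optimizer state
BANDWIDTHS = {
    "10 GB/s aggregate": 10e9,
    "1 TB/s aggregate": 1e12,
}

for label, bandwidth in BANDWIDTHS.items():
    seconds = checkpoint_write_seconds(CHECKPOINT_BYTES, bandwidth)
    print(f"{label}: {seconds:,.0f} s ({seconds / 60:.1f} min)")
```

Real systems add metadata, network, and contention overheads on top of this ratio, so treat the result as a lower bound rather than a prediction.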

Recently we have seen discussions in the public domain that seem to indicate that async checkpointing is sufficient for AI workloads, and we emphatically disagree. Where it exists, async operation reduces immediate GPU idle time, but it doesn’t solve the underlying challenge of handling massive parallel writes from thousands of GPUs. DDN’s platform doesn’t just postpone the bottleneck – it eliminates it entirely.
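The argument about async checkpointing can be sketched with a simple stall model. It rests on assumptions that are ours, not taken from any specific framework: only one background write can be in flight at a time, an async checkpoint briefly stalls the GPUs while state is copied to host memory (`snapshot_s`), and the next checkpoint cannot start until the previous write has finished. All names and numbers are hypothetical.

```python
def stall_per_checkpoint(write_s: float, interval_s: float,
                         snapshot_s: float = 0.0, asynchronous: bool = False) -> float:
    """GPU stall time per checkpoint under a simplified steady-state model.

    write_s:     time for storage to absorb one checkpoint
    interval_s:  training time between checkpoints
    snapshot_s:  time to copy state off the GPU before an async write
    """
    if not asynchronous:
        # Synchronous: training waits for the whole write.
        return write_s
    # Asynchronous: the write overlaps with training, but if it takes longer
    # than the interval, the next checkpoint must wait for it to finish.
    backlog = max(0.0, write_s - interval_s)
    return snapshot_s + backlog


# Hypothetical scenario: checkpoint every 10 minutes, 5 s snapshot copy.
for write_s in (1000.0, 10.0):
    sync = stall_per_checkpoint(write_s, 600.0)
    async_ = stall_per_checkpoint(write_s, 600.0, snapshot_s=5.0, asynchronous=True)
    print(f"write={write_s:>6.0f}s  sync stall={sync:>6.0f}s  async stall={async_:>5.0f}s")
```

In this model, async hides the write only while storage keeps up with the checkpoint interval. Once the write time exceeds the interval, the stall returns, which is the sense in which async postpones the bottleneck rather than removing it.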

Our Data Intelligence architecture transcends basic storage metrics to deliver true production AI capabilities:

- 100x acceleration in search performance for large scale data preprocessing

- Raw data processing at over 3 terabytes per second per rack

- Fast Indexing rates that double the throughput per system

AI isn’t just about training. Data preprocessing and real-time inference are now limiting factors for many organizations. Data scientists must label, normalize, and munge massive datasets. Models must update instantly while handling thousands of inference requests per second with sub-millisecond latency. Traditional architectures break under this demand. DDN’s data intelligence platform sustains real-time responsiveness, scaling inference workloads across industries. AI leaders, including hundreds of global enterprises, NVIDIA, and xAI’s Colossus, rely on DDN to safely maximize business value from AI at any scale, without adversely impacting their existing IT infrastructure.

With close to 300% in AI business growth in 2024, 500,000+ NVIDIA GPUs supercharged by DDN, and a $300 Million investment by Blackstone at a $5 Billion valuation, DDN is redefining AI data intelligence.

The above translates to much-needed AI business value and a very compelling ROI:

- Training efficiency: Reducing model training time by 30% -> shorter time to model release

- Storage cost optimization: Cutting costs by 30-40% with intelligent data placement -> reduce hardware costs, reduce complexity and data center costs

- GPU utilization: Improving efficiency from 60-65% to 80-85% -> optimize capital expenditure (fewer GPUs) and reduce power needs

- Checkpoint acceleration: Enabling 10x faster operations -> eliminate training choke points

- Inference optimization: Lowering latency by 40-60% -> many more concurrent users supported
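As a rough illustration of how the GPU utilization figure above could translate into capital savings, the sketch below computes how many GPUs deliver the same useful work at a higher utilization. It assumes useful work scales linearly with GPU count times utilization. The 1,000-GPU baseline and the function name are hypothetical, and the utilization values are simply the midpoints of the ranges quoted in the list.

```python
import math


def gpus_for_same_work(baseline_gpus: int, baseline_util: float, improved_util: float) -> float:
    """GPUs needed at improved_util to match the useful work of the baseline fleet.

    Assumes useful work = GPU count * utilization (a linear simplification).
    """
    if not (0 < baseline_util <= 1 and 0 < improved_util <= 1):
        raise ValueError("utilization must be in (0, 1]")
    return baseline_gpus * baseline_util / improved_util


BASELINE_GPUS = 1000    # hypothetical fleet size
BASELINE_UTIL = 0.625   # midpoint of the quoted 60-65% range
IMPROVED_UTIL = 0.825   # midpoint of the quoted 80-85% range

needed = gpus_for_same_work(BASELINE_GPUS, BASELINE_UTIL, IMPROVED_UTIL)
saved_fraction = 1 - needed / BASELINE_GPUS
print(f"GPUs needed: {math.ceil(needed)} ({saved_fraction:.1%} fewer than baseline)")
```

Under these assumptions the same work needs roughly a quarter fewer GPUs. Actual savings depend on workload mix and on whether utilization gains hold across the whole fleet.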

Scale-out NFS with S3 support reveals critical limitations: IOPS capped at 100K operations per second, high latency spikes during metadata operations, and poor handling of embedding lookups. These limitations cut concurrent user support by 50% and create GPU stalls that cripple production deployments. You need high-performance S3 and POSIX capabilities integrated in a single platform.

The future of AI infrastructure isn’t about incremental storage improvements – it’s about transforming how data, compute, and intelligence interact. DDN delivers this future today through a platform that sets new standards for scalability, efficiency, and security in AI operations.

Join us on February 20th for Beyond Artificial, where we’ll unveil our next breakthrough in AI data intelligence that will reshape how organizations build and deploy AI at scale.